Music Recommendations #1: Why People Don't Use Them

Summary

As the sole researcher on the team, I owned one feature of a music streaming service and worked with ML data scientists on a specific problem: personalized songs had low streaming counts, even though the recommendation system had recently been overhauled.

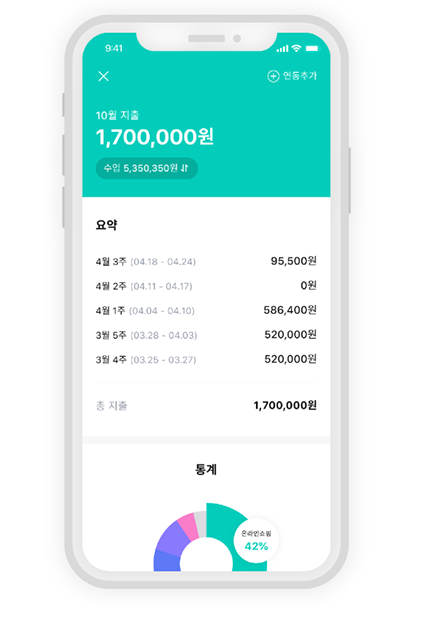

I took a qualitative approach because we needed to understand *why* streaming counts weren't growing, not just measure them. Through heuristic evaluation, journey mapping, and usability testing, I found that UI complexity was preventing users from discovering recommendations.

My findings led the team to simplify the interface and to recognize that users prefer familiar music in certain contexts. Implementing these findings, with follow-up quantitative research to measure the impact, resulted in a 6% increase in streaming counts.

Key Insights

- User experience is fragmented by expertise level: Short-term users struggle with navigation and exhaust their cognitive resources before they reach recommendations, while long-term users create personal shortcuts that bypass new features entirely.

- Familiarity drives engagement more than novelty: Users prefer recognizable songs in recommendation lists, challenging the team's assumption that diversity in recommendations is always preferable.

- Context significantly influences music selection behavior: Users adopt more conservative selection strategies when choosing music for specific activities (like driving or jogging), gravitating toward familiar songs and avoiding exploration.